Network quality for rocket scientists

February 14, 2022

In rocket science there is a saying: “Mass begets mass.” A heavy rocket needs a rigid structure to avoid buckling under its own weight and powerful engines to lift itself. Heavier rockets also require more fuel, and that extra fuel adds even more weight. Therefore, each kilogram of mass added to any component of a rocket adds more than one kilogram to the total weight at takeoff. Rockets are insanely hard to get right, partly because mass begets mass.

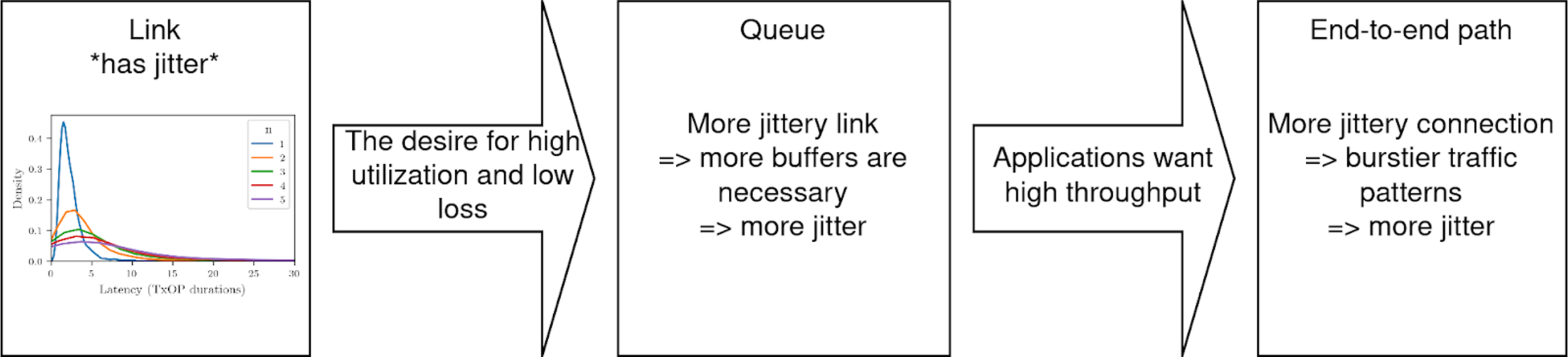

In computer networks, a similar effect occurs. Jitter begets jitter. Let me explain.

Jitter is a term used for the variability of latency. Here’s an example: If sending a single packet takes 50 milliseconds on one occasion, but the next time it takes 80 milliseconds, you might say the jitter is 30 milliseconds. There are many different ways to define jitter, but all of them capture a notion of the unpredictability of latency for individual packets. Individual links and end-to-end paths in the network can have variable latency and therefore be “jittery”.
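The post deliberately leaves the definition of jitter open. As a rough sketch, here are four common ways to put a number on it, applied to the 50 ms / 80 ms example. The function names are made up for illustration; the last one follows the running interarrival-jitter estimator from the RTP specification (RFC 3550), simplified here to take per-packet transit times directly.

```python
from statistics import mean, pstdev

def jitter_range(latencies_ms):
    """Difference between the slowest and the fastest packet."""
    return max(latencies_ms) - min(latencies_ms)

def jitter_mean_abs_diff(latencies_ms):
    """Average change in latency from one packet to the next."""
    diffs = [abs(b - a) for a, b in zip(latencies_ms, latencies_ms[1:])]
    return mean(diffs)

def jitter_stdev(latencies_ms):
    """Population standard deviation of the latency samples."""
    return pstdev(latencies_ms)

def jitter_rfc3550(transit_ms):
    """Running jitter estimate in the style of RFC 3550 (RTP).

    Each new latency difference D nudges the estimate J by (|D| - J) / 16,
    so a single outlier only moves J a little.
    """
    j = 0.0
    for prev, cur in zip(transit_ms, transit_ms[1:]):
        j += (abs(cur - prev) - j) / 16
    return j

samples = [50, 80]  # the two packets from the example above, in ms
for name, fn in [("range", jitter_range), ("mean |diff|", jitter_mean_abs_diff),
                 ("stdev", jitter_stdev), ("RFC 3550", jitter_rfc3550)]:
    print(f"{name:>12}: {fn(samples):.3f} ms")
```

The definitions disagree: the range and the mean consecutive difference both give 30 ms, the standard deviation gives 15 ms, and the RFC 3550 estimator barely moves from zero, because it is meant to smooth over long packet streams rather than two samples.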

OK, so jitter is unpredictable latency. How does that beget itself?

Jittery links require more buffers

Let’s start with a single jittery network link. When a link has jitter, we do not know how long it will remain busy serving a packet. Suppose packets arrive at a steady pace, one per average service time. Some unlucky packets will arrive while the link is still busy with the preceding packet. These packets get dropped and are lost forever (we’ll get to buffering later!). At other times the link sits idle, because a packet was served faster than average and the next packet has not arrived yet. For high utilization, each packet would have to arrive the moment the link finishes serving the preceding one, but with unpredictable service times we cannot know when that moment will be.
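To see the problem in numbers, here is a toy simulation of a link with no buffer, under assumptions that are mine rather than the post's: packets arrive exactly once per average service time, and the jittery link's service time is drawn from an exponential distribution with the same mean. The function and parameter names are hypothetical.

```python
import random

def simulate_no_buffer(n_packets, service_time, arrival_interval=1.0, seed=0):
    """Packets arrive every `arrival_interval`; the link has no buffer.

    `service_time(rng)` returns how long the link stays busy with one packet.
    A packet that arrives while the link is still busy is dropped.
    Returns (loss_fraction, utilization).
    """
    rng = random.Random(seed)
    busy_until = 0.0   # time at which the link finishes its current packet
    busy_time = 0.0    # total time the link spent transmitting
    dropped = 0
    for i in range(n_packets):
        t = i * arrival_interval
        if t < busy_until:
            dropped += 1       # link still busy: this packet is lost
            continue
        s = service_time(rng)
        busy_until = t + s
        busy_time += s
    horizon = max(n_packets * arrival_interval, busy_until)
    return dropped / n_packets, busy_time / horizon

def steady(rng):
    return 1.0                   # no jitter: always exactly one time unit

def jittery(rng):
    return rng.expovariate(1.0)  # jitter: random, but one time unit on average

for name, svc in [("steady", steady), ("jittery", jittery)]:
    loss, util = simulate_no_buffer(100_000, svc)
    print(f"{name:>8}: loss={loss:.1%} utilization={util:.1%}")
```

With steady service the link loses nothing and is never idle. With jittery service at the same average speed, the run should land around 37% of packets lost and the link idle about as often, which is exactly the double failure described above.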

I can think of only two ways around this problem: We can use very high arrival rates and just accept large amounts of packet loss, or we can add buffers to the link. Most networked applications do not work well with lots of packet loss, so we’ll go with adding some buffers.

Like the queue at a supermarket, network buffers form queues of packets. A queue ensures that the link (cashier) does not sit idle between serving packets (customers). As long as there is room in the queue, the queue also avoids packet loss. With the right amount of queuing, packets can arrive at the average rate of the link without significant packet loss and without letting the link go idle. However, there is a sweet spot for the length of the queue. Too much queuing just adds more latency without improving utilization or packet loss (this is known as bufferbloat and is much more common than you’d think). The critical observation here is this: The more jittery the underlying link, the more buffers are needed to achieve the sweet spot combination of high utilization and low loss.
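Extending the toy model with a finite FIFO buffer shows the critical observation directly. This is a sketch under hypothetical parameters: arrivals once per time unit, a link loaded to 90% so the queue stays stable, and two service-time distributions with the same mean, one narrow (low jitter) and one exponential (high jitter).

```python
import random
from collections import deque

def simulate_buffer(n_packets, buffer_size, service_time, arrival_interval=1.0, seed=0):
    """FIFO link with room for `buffer_size` waiting packets plus one in service.

    Packets arrive every `arrival_interval`; `service_time(rng)` gives each
    packet's transmission time. Returns (loss_fraction, utilization).
    """
    rng = random.Random(seed)
    in_system = deque()  # departure times of queued/in-service packets, FIFO order
    busy_time = 0.0
    dropped = 0
    for i in range(n_packets):
        t = i * arrival_interval
        while in_system and in_system[0] <= t:
            in_system.popleft()             # these packets have already left
        if len(in_system) > buffer_size:    # link busy and every buffer slot full
            dropped += 1
            continue
        start = in_system[-1] if in_system else t  # wait for everything ahead
        s = service_time(rng)
        in_system.append(start + s)
        busy_time += s
    horizon = max(n_packets * arrival_interval, in_system[-1] if in_system else 0.0)
    return dropped / n_packets, busy_time / horizon

def low_jitter(rng):
    return rng.uniform(0.8, 1.0)     # mean 0.9, never longer than the arrival gap

def high_jitter(rng):
    return rng.expovariate(1 / 0.9)  # mean 0.9, occasionally much longer

for buffer_size in (0, 5, 20, 50):
    row = []
    for name, svc in [("low", low_jitter), ("high", high_jitter)]:
        loss, util = simulate_buffer(100_000, buffer_size, svc)
        row.append(f"{name}-jitter loss={loss:.2%} util={util:.1%}")
    print(f"buffer={buffer_size:>2}: " + " | ".join(row))
```

In this toy setup the low-jitter link needs no buffer at all to avoid loss, since no packet ever takes longer than the gap between arrivals. The high-jitter link loses a large share of its packets without a buffer, and its loss only approaches zero once the buffer holds tens of packets.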

So, adding buffers solves our problem, right? Unfortunately, there is one major caveat. When packets are sitting in a buffer, they rack up waiting time, which adds to their total end-to-end delay. And because queues are not always equally full, the time spent waiting in the queue can vary from one packet to the next. See the problem? Buffers produce jitter!
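Lindley's recursion is a compact way to see why buffers produce jitter. In a FIFO queue with an unlimited buffer, each packet has to wait out whatever backlog the previous packet left behind. The sketch below, again assuming arrivals once per time unit and a 90% loaded link (hypothetical numbers), compares identical service times against random ones with the same mean.

```python
import random
from statistics import mean, pstdev

def waiting_times(service_times, arrival_interval=1.0):
    """Lindley's recursion for a FIFO queue with an unlimited buffer.

    W[0] = 0 and W[n+1] = max(0, W[n] + S[n] - A): packet n+1 must wait out
    packet n's own wait and service time, minus the gap A between arrivals.
    """
    waits = [0.0]
    for s in service_times[:-1]:
        waits.append(max(0.0, waits[-1] + s - arrival_interval))
    return waits

rng = random.Random(1)
n = 100_000
steady = [0.9] * n                                      # identical service times
jittery = [rng.expovariate(1 / 0.9) for _ in range(n)]  # same mean, random service

for name, svc in [("steady", steady), ("jittery", jittery)]:
    w = waiting_times(svc)
    print(f"{name:>8}: mean wait={mean(w):.2f}  stdev={pstdev(w):.2f}  max={max(w):.2f}")
```

With identical service times the queue never builds up and every packet waits zero. With random service times the waits vary widely from packet to packet, even though the link's average speed is unchanged; that variation is jitter created by the buffer itself.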

This is one way in which “jitter begets jitter”. The more jittery a link is, the more buffers we need to achieve the high-utilization, low-loss combination, and each buffer we add increases the jitter produced by the queue. Building high-performance networks from jittery links is a bit like building a rocket from low-quality materials. When material strength is variable, all components must be made bigger to compensate for potential weaknesses, and this quickly snowballs into a vastly heavier rocket.

But that is not all: jitter begets jitter in more ways than one.

Unstable connections incentivize bursty traffic

When service is not guaranteed, those who can will get in line early to make sure they get served. Think of eager fans pitching tents to be first in line for movie tickets, or panicked shoppers buying too much toilet paper.

Jittery connections incentivize applications to send bursts of traffic. To keep a long story reasonably short, trust me when I say that TCP throughput is highly variable over jittery links. There is a real sense in which unpredictable latency is unpredictable throughput (so this problem will not go away with QUIC or BBR). Applications with high throughput requirements, therefore, increase their peak traffic rate to hedge against throughput suddenly dropping below what the application needs for a while. This is one reason why streaming services such as Netflix and YouTube use bursty traffic patterns. In turn, high peak traffic rates increase the jitter-inducing effect of buffering, just like rush hour traffic causes delays on the highway.
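A small variation on Lindley's recursion illustrates the rush-hour effect. Here the link itself is perfectly steady, and only the arrival pattern changes: packets either arrive one per time unit, or in back-to-back bursts of 10 followed by a pause, which is the same average rate of 10 packets every 10 time units. The burst size and timings are hypothetical, and this is a toy model, not a model of TCP or of any particular streaming service.

```python
from statistics import mean, pstdev

def lindley_waits(service_times, gaps):
    """Queueing delay per packet on a FIFO link with an unlimited buffer.

    gaps[n] is the time between the arrivals of packet n and packet n+1.
    Lindley's recursion: W[n+1] = max(0, W[n] + S[n] - gaps[n]).
    """
    waits = [0.0]
    for s, gap in zip(service_times, gaps):
        waits.append(max(0.0, waits[-1] + s - gap))
    return waits

N = 1000
BURST = 10                       # hypothetical burst size
service = [0.9] * N              # a perfectly steady link, no jitter of its own
smooth = [1.0] * (N - 1)         # one packet per time unit
# BURST packets back-to-back, then a pause of BURST time units before the next burst.
bursty = [float(BURST) if (n + 1) % BURST == 0 else 0.0 for n in range(N - 1)]

for name, gaps in [("smooth", smooth), ("bursty", bursty)]:
    w = lindley_waits(service, gaps)
    print(f"{name:>7}: mean wait={mean(w):.2f}  stdev={pstdev(w):.2f}  max={max(w):.2f}")
```

The smooth pattern never queues. The bursty pattern makes packets inside each burst wait anywhere from 0 to about 8.1 time units, so burstiness alone turns a jitter-free link into a jittery path.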

When service is unpredictable there is an incentive to “get in line early”, and thus queues fill more aggressively than they otherwise would have. Again, jitter begets jitter.

Building a better internet

Just like “reduce mass” is the obvious solution to building better rockets, the obvious solution to building better networks is to reduce jitter. But that’s easier said than done.

There are ways to reduce jitter in the internet. Lots of great work has gone into reducing unnecessary queuing (aka bufferbloat), but “necessary queuing”, that is, the queuing needed to maintain high link utilization and low loss, also introduces jitter. Solving the “necessary queuing” problem requires the ability to multiplex traffic over shared and unstable links while adding as little jitter as possible. Again, no easy task, but many recent developments such as MU-MIMO in Wi-Fi 6, URLLC in 5G, and Low Latency DOCSIS (to name a few) move us in the right direction. Therein lies great potential for a more reliable internet.